Note

It is recommended to read PART-2 first.

Introduction

As your website amazinglyme.com grows in popularity, more and more users are visiting it, but you still have only one server. With thousands of requests coming in, that server's CPU cannot process them all, so users start seeing errors or very slow responses. Let's address this issue.

Scaling the server

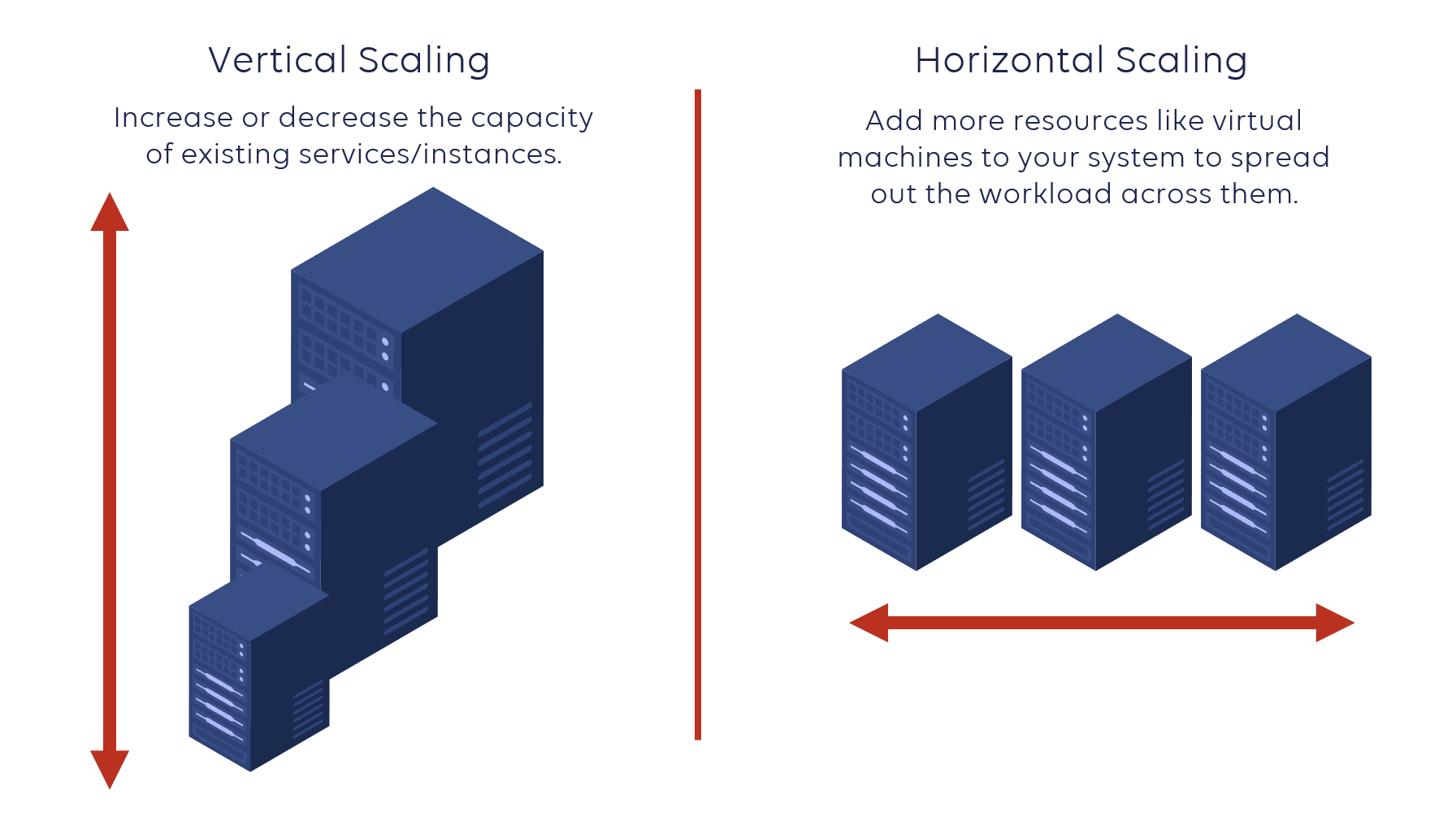

Now we are going to scale the web server. This can be done in two ways —

Vertical Scaling (scale-up)

If one server is not enough to serve X requests, we can replace it with a bigger server that has more processing power. Since we are increasing the size of the server, this is called scaling up (vertical scaling). However, it has some disadvantages:

- Limitation of scaling: There is a limit to how big a single server can get, so if the number of requests keeps increasing, you will eventually run out of room to scale up.

- Cost of scaling: Replacing the server with a bigger one is expensive, because you are paying for more powerful hardware (more CPU and RAM).

- No Failover: No matter how big the server is, if it fails there is no other server to handle the requests, and the entire system goes down. This directly impacts the business, because users cannot access the website.

Failover is a procedure by which a system automatically transfers control to a duplicate system when it detects a fault or failure in the main system.

- Inefficient Resource Utilisation: Imagine you bring in a big server, but the request load later drops drastically. The server is now handling a load that a smaller server could have served. Because we are stuck with the big server, much of its capacity sits unused.

Horizontal Scaling (scale-out)

If the current server is unable to serve X requests, one way to solve this is to add another, identical server that shares the load. Now we have 2 servers, each serving about X/2 requests, so no single server is overloaded. Since we are spreading the load out over multiple servers, this is called scaling out (horizontal scaling).
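Here is a small Python sketch that makes the X/2 arithmetic concrete. The figures are hypothetical. It also assumes identical servers and a perfectly even split of traffic, which a real setup only approximates.

```python
import math

def servers_needed(total_rps: int, per_server_rps: int) -> int:
    """Smallest number of identical servers whose combined capacity covers total_rps."""
    return math.ceil(total_rps / per_server_rps)

def load_per_server(total_rps: int, num_servers: int) -> float:
    """Requests per second each server handles if the load is split evenly."""
    return total_rps / num_servers

# Hypothetical figures: X = 1,000 requests/second, one server handles up to 600.
X = 1000
n = servers_needed(X, per_server_rps=600)
print(n, load_per_server(X, n))  # 2 500.0
```

One server capped at 600 requests per second cannot absorb 1,000. Two such servers can, and each one ends up at about X/2.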

Advantages of horizontal scaling —

- Near-Unlimited Scaling: We can keep adding more servers to spread the load further, instead of being capped by the size of the biggest machine available.

- Cost of Scaling: Since we are only adding more servers of the same capacity, this usually costs less than replacing the existing server with a bigger one.

- Failover: We now have failover. If one of the servers fails, its traffic can be directed to the other servers, so users do not face any downtime or outage.

- Better Resource Utilisation: We can auto-scale the number of servers based on traffic. If traffic is very low, we can switch off some servers, and as soon as it increases, we can add more.
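A simple way to decide how many servers to run is a threshold rule. The pool is sized so that each server stays near a target load, within a minimum and maximum count. The sketch below is a hypothetical illustration of that idea, not the algorithm of any particular cloud provider. The function name, target load and bounds are all assumptions.

```python
import math

def desired_server_count(current_rps: float, target_rps_per_server: float,
                         min_servers: int = 2, max_servers: int = 20) -> int:
    """Hypothetical threshold rule: enough servers to keep each near its target load."""
    needed = math.ceil(current_rps / target_rps_per_server)
    # Keep at least min_servers so failover is still possible,
    # and never exceed max_servers (e.g. a budget cap).
    return max(min_servers, min(max_servers, needed))

# Hypothetical traffic at different times of day (requests per second).
for rps in [50, 900, 4000, 30000]:
    print(rps, desired_server_count(rps, target_rps_per_server=500))
```

Keeping a floor of two servers, even at very low traffic, preserves the failover benefit described above.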

How does Horizontal Scaling work in the architecture?

In the basic single-server client-server architecture from PART-2, we saw that when the client calls ‘amazinglyme.com’, the request first goes to DNS, which resolves the domain name to the IP address of the web server.

But once we scale horizontally and have multiple servers, which IP address should DNS return for ‘amazinglyme.com’? In other words, which server should the client's request be directed to?

To solve this, we need a component that receives the incoming requests, decides which server each request should go to, and then forwards it to that server. This component is called a ‘Load Balancer’.

Load Balancer

A load balancer is either a dedicated device (a hardware load balancer) or an application installed on a server that performs the same function (a software load balancer). It sits between the users' incoming requests and the web servers, and routes each request to one of the servers based on some logic. To read more on load balancers, see link.
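The "some logic" can be as simple as round-robin, where servers are picked in turn. Below is a minimal Python sketch of a software load balancer's selection step. It assumes round-robin plus a per-server health flag. The class and method names and the 10.0.0.x private addresses are hypothetical. The sketch also shows failover: a server marked down is skipped, so its share of traffic moves to the remaining servers.

```python
from itertools import count

class RoundRobinBalancer:
    """Hypothetical software load balancer: round-robin over healthy web servers."""

    def __init__(self, backends):
        self.backends = list(backends)     # private IPs of the web servers
        self.healthy = set(self.backends)  # assume every server starts healthy
        self._counter = count()            # ever-increasing round-robin position

    def mark_down(self, backend):
        # A periodic health check would call this when a server stops responding.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        self.healthy.add(backend)

    def pick(self):
        """Return the next healthy server, skipping any that are marked down."""
        for _ in range(len(self.backends)):
            candidate = self.backends[next(self._counter) % len(self.backends)]
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy web server available")

lb = RoundRobinBalancer(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
print([lb.pick() for _ in range(3)])  # ['10.0.0.11', '10.0.0.12', '10.0.0.13']
lb.mark_down("10.0.0.12")             # simulate a server failure
print([lb.pick() for _ in range(4)])  # traffic now goes only to .11 and .13
```

Round-robin is only one option. Real load balancers also commonly offer strategies such as least-connections, which sends each request to the server with the fewest active connections.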

Let’s see how our architecture changes after we add load balancers and more web servers —

In the above diagram —

- The user enters amazinglyme.com in the browser and presses Enter.

- The request goes to DNS to resolve the domain name to an IP address.

- DNS resolves the domain to the load balancer's public IP address and returns it to the client.

- The client sends the request to the load balancer's IP address.

- The load balancer receives the request and, based on how it is configured, forwards it to one of the web servers (Server N).

- The web server receives the request, reads the website data from the database (and may also write data to it), and then sends the response back to the client via the load balancer.

- Earlier, the request was sent directly to the web server, because the web server had a public IP and DNS returned that IP.

- With a load balancer in the picture, the web servers are no longer publicly exposed and can only be reached through the load balancer. This protects them from direct attacks from the internet.

- No request goes directly to the web servers, which are hosted on private IP addresses.
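The request flow above can be simulated in a few lines of Python. This is a toy model under stated assumptions. DNS is a plain dictionary, and "the internet" is a function that only accepts traffic for the load balancer's IP. The two web servers are hypothetical private 10.0.1.x addresses. The load balancer IP 203.0.113.10 is a placeholder from the range reserved for documentation, and all function names are illustrative.

```python
import ipaddress
import itertools

class Unreachable(Exception):
    """Raised when a request targets an address the public internet cannot reach."""

# Hypothetical setup: the domain resolves to the load balancer, not a web server.
LB_PUBLIC_IP = "203.0.113.10"  # placeholder address from the documentation range
DNS_RECORDS = {"amazinglyme.com": LB_PUBLIC_IP}

# Web servers only have private IPs; the load balancer cycles through them.
WEB_SERVERS = ["10.0.1.11", "10.0.1.12"]
_servers = itertools.cycle(WEB_SERVERS)

def resolve(domain: str) -> str:
    """DNS returns the load balancer's public IP."""
    return DNS_RECORDS[domain]

def web_server_handle(server_ip: str, path: str) -> str:
    """A real server would read from (and maybe write to) the database here."""
    return f"{path} served by {server_ip}"

def load_balancer_handle(path: str) -> str:
    """Pick a private web server and relay its response back to the client."""
    server_ip = next(_servers)
    return web_server_handle(server_ip, path)

def send_from_internet(dest_ip: str, path: str) -> str:
    """Only the load balancer's public IP accepts traffic from outside."""
    if dest_ip == LB_PUBLIC_IP:
        return load_balancer_handle(path)
    if ipaddress.ip_address(dest_ip).is_private:
        raise Unreachable(f"{dest_ip} is private and not routable from the internet")
    raise Unreachable(f"nothing is listening at {dest_ip}")

def client_request(domain: str, path: str = "/") -> str:
    return send_from_internet(resolve(domain), path)

print(client_request("amazinglyme.com"))  # / served by 10.0.1.11
print(client_request("amazinglyme.com"))  # / served by 10.0.1.12
try:
    send_from_internet("10.0.1.11", "/")  # trying to bypass the load balancer
except Unreachable as err:
    print(err)
```

The model makes the security point concrete. The client only ever learns the load balancer's address. A direct attempt to reach a web server's private IP fails.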

Now that our web tier is in good shape for scaling, let's see how we can improve the data tier next. See you in PART-4.